This post is structured as follows.

- The Problem

- Dynamic AI Model Deployment and Controlled Experimentation

- Dynamic Model Rules and Adaptive Decision Control

- Step 1: A Fast, Synchronous Quality Gate (Fail Fast, Locally)

- Step 2: AI Semantic Eligibility Check (Relevance Before Intelligence)

- Step 3: Plagiarism Detection (Protecting Originality and Trust)

- Step 4: Promotional Abuse & Intent Detection

- Step 5: AI-Driven Content Refinement (Improvement Without Distortion)

- Step 6: Semantic Similarity Search (Contextual Linking at Scale)

- Auditing the Entire Workflow

- Conclusion: Building AI Systems That Survive Reality

Publishing user-generated content at scale looks easy at first. You accept a post, you review it, you hit publish. But once you care about reliability, quality, and trust as much as you care about growth, it stops being simple.

On SiteReq, posts aren’t “drafts sitting somewhere in the admin panel.” They’re public pages. People read them. Google indexes them. And whether the platform feels credible or not is heavily influenced by what gets published (and what accidentally slips through).

So when something goes wrong—a broken link, a low-quality edit, a weird formatting regression—it’s not hidden. It’s live. And fixing it usually costs more than the original review would have.

That’s why the real challenge wasn’t approving faster. It was approving faster without disrupting what was already live.

Manual reviews were slow and inconsistent, and as submissions increased they became impossible to keep up with. But fully automating approval had its own risks: accidental regressions, promotional abuse, topic drift, and “quiet” SEO damage that you only notice weeks later.

The scary part is that one bad update can invalidate a post that was previously clean, forcing rollbacks and reactive fixes. And the system had to deal with all of this without ideal conditions:

- Limited budget

- Asynchronous workloads

- Very inconsistent content quality

- A constant tradeoff between speed and safety

So the requirement wasn’t “pass checks.” The requirement was: preserve availability, isolate risk, and make recovery possible when something inevitably goes wrong.

This post walks through how I tackled that by building a versioned, non-disruptive AI content approval pipeline—one where new versions are evaluated independently, the live version remains stable, and quality gates can be enforced without slowing the platform down or breaking trust.

The Problem

SiteReq is a blogging-as-a-service platform where users can publish guest posts for free in their own blogging spaces. Once a post is published, it becomes a real public-facing asset. It affects readers and search visibility, which means it affects the platform as a whole.

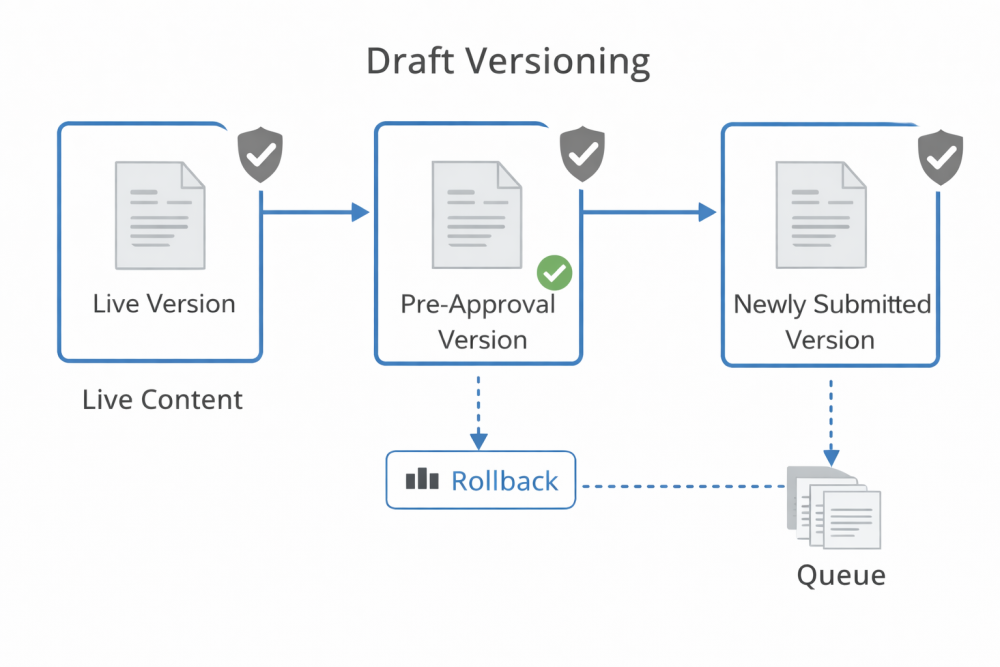

To keep the platform stable, posts on SiteReq are versioned. Every submission or edit creates a new version that has to be approved before it goes live. While that version is being reviewed, the previously approved version keeps serving readers with zero interruption.
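As a rough illustration of that versioning model (not the actual SiteReq implementation), here is a minimal Python sketch. The `Post` class and its method names are hypothetical; the only point is that submitting a new version never changes what readers are served until that version is approved.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Post:
    """A post whose readers only ever see the last approved version."""
    versions: list[str] = field(default_factory=list)  # every submitted body
    live_index: Optional[int] = None                    # index of the approved version

    def submit(self, body: str) -> int:
        """Store a new pending version; the live version is untouched."""
        self.versions.append(body)
        return len(self.versions) - 1

    def approve(self, index: int) -> None:
        """Promote a reviewed version to live."""
        if not 0 <= index < len(self.versions):
            raise IndexError("unknown version")
        self.live_index = index

    def live_body(self) -> Optional[str]:
        """What readers are served right now (None if nothing is approved yet)."""
        return None if self.live_index is None else self.versions[self.live_index]


post = Post()
v0 = post.submit("First draft")
post.approve(v0)
v1 = post.submit("Edited draft")  # pending review
print(post.live_body())            # prints: First draft
```

Rejecting `v1` in this sketch is simply a matter of never calling `approve(v1)`; nothing has to be rolled back.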

This model protects availability (and prevents “oops” updates from breaking live pages), but it comes with operational complexity. In the beginning, approvals were completely manual. I’d review the content, verify structure, check links, sanity-check intent, and approve. On average, that took about 30 minutes per post.

That’s fine when submissions are rare. It breaks down as soon as usage grows.

There was also a very real availability constraint: approvals could only happen during my working hours. A post submitted late at night would sit until the next day. If I had a busy day at my full-time job, that “next day” often turned into two. Backlogs built up, and turnaround time became unpredictable.

At that point, speed wasn’t the main issue anymore. The bigger issue was reliability under real-world constraints. Any solution had to improve all of the following at once:

- Operational overhead: reduce the time sink of repetitive manual reviews

- Approval availability: approvals shouldn’t depend on me being online

- Productivity: reclaim time for actual platform improvements instead of moderation backlog

- Cost efficiency: the platform is self-funded, so every architectural choice has real cost impact

Solving one of these in isolation wouldn’t help. The pipeline had to improve all of them without compromising availability, content quality, or trust.

Dynamic AI Model Deployment and Controlled Experimentation

One thing I didn’t want was to hard-wire the platform to a single model decision and call it “done.” Automating approvals with AI isn’t a one-time choice. Model quality changes, costs shift, latency varies, and behavior evolves over time.

So from the start, I treated model selection as a runtime concern, not something that should require code changes and redeployments.

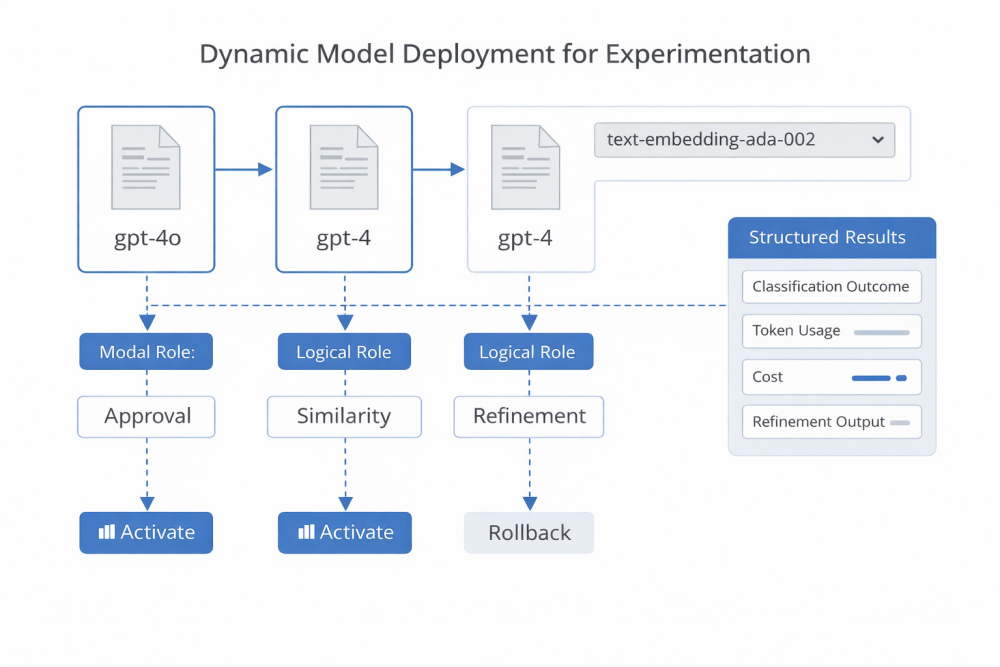

Each AI capability in the pipeline—topic eligibility, anchor classification, refinement, semantic similarity—maps to a logical role, not a hard-coded deployment. That role can be pointed to different Azure OpenAI models or embedding deployments without changing application code or redeploying the system.

That made experimentation practical and safe. I could:

- Switch between GPT-4o, GPT-4, and lighter models depending on the step

- Compare embedding models for eligibility and similarity

- Reserve higher-cost models for the steps where they genuinely added value

- Roll back quickly if a model started producing unstable results

The important part: model changes never affected live content. Because approvals operate on isolated versions, experimenting with a new model only influences future approvals—not content that’s already being served to readers.
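To make the idea of logical roles concrete, here is a small Python sketch of a role-to-deployment registry with rollback. It is only an illustration: `RoleRegistry`, `Deployment`, the role names, and the deployment names are all hypothetical, and the real system stores this mapping as operational configuration rather than in memory.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    name: str            # deployment name in the AI service (hypothetical values below)
    active: bool = True


class RoleRegistry:
    """Maps logical roles to deployments and remembers the previous mapping."""

    def __init__(self) -> None:
        self._current: dict[str, Deployment] = {}
        self._previous: dict[str, Deployment] = {}

    def assign(self, role: str, deployment: Deployment) -> None:
        """Point a role at a deployment, keeping the old one for rollback."""
        if role in self._current:
            self._previous[role] = self._current[role]
        self._current[role] = deployment

    def rollback(self, role: str) -> None:
        """Restore the deployment that was mapped before the last assign."""
        if role not in self._previous:
            raise KeyError(f"no previous deployment for role {role!r}")
        self._current[role] = self._previous.pop(role)

    def resolve(self, role: str) -> str:
        """Return the deployment name the pipeline should call for this role."""
        deployment = self._current[role]
        if not deployment.active:
            raise RuntimeError(f"role {role!r} is deactivated")
        return deployment.name


registry = RoleRegistry()
registry.assign("topic-eligibility", Deployment("embedding-small"))
registry.assign("refinement", Deployment("gpt-4o"))
registry.assign("refinement", Deployment("gpt-4o-mini"))  # try a lighter model
registry.rollback("refinement")                            # results were unstable
print(registry.resolve("refinement"))                      # prints: gpt-4o
```

Pipeline code in this sketch only ever asks for a role, so swapping or rolling back a model never touches the calling code.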

Each execution also captured structured results (outcomes, token usage, and the reasons behind pass/fail decisions). Over time, that created a feedback loop where model behavior could be evaluated based on data, not gut feeling.

And that mattered because the system had to work under real constraints: a self-funded budget, unpredictable workloads, and user-generated content where edge cases are basically guaranteed.

The goal wasn’t to find a “perfect model.” The goal was to build a system that could adapt safely as models and costs change.

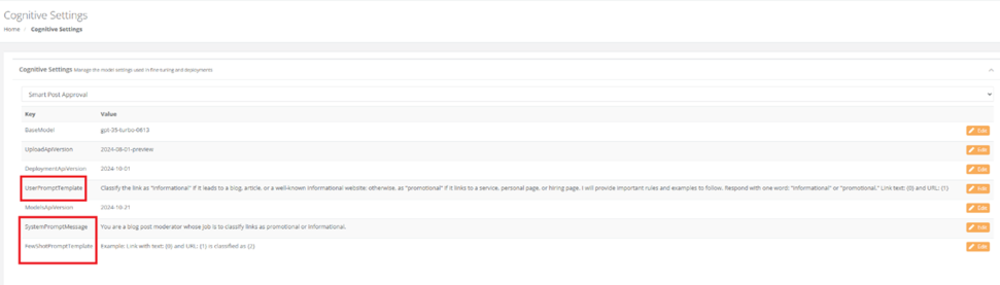

Model Deployment Interface (Operational View)

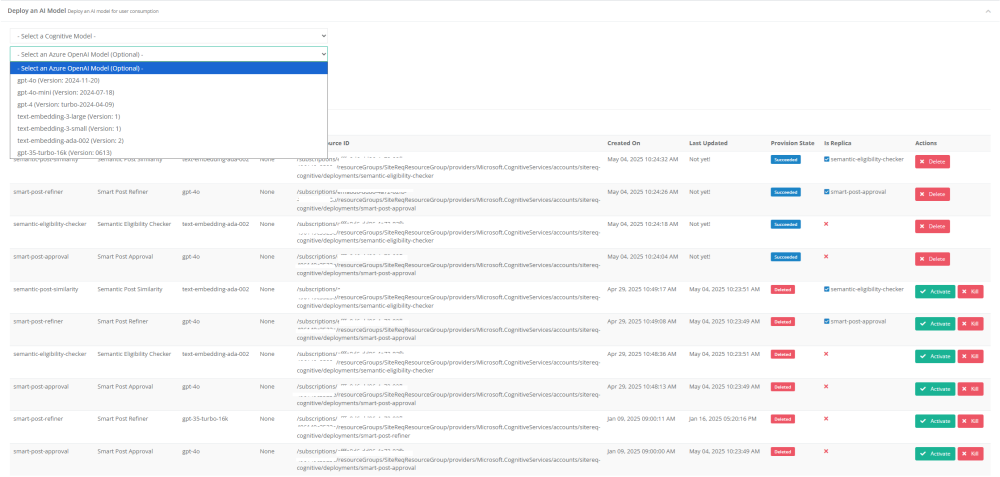

To make this practical, model configuration lives as an operational capability rather than a development task. Each logical AI role can be mapped to a deployment, activated/deactivated, and replaced independently.

That separation made it possible to iterate quickly:

- Test newer model versions without downtime

- Compare performance across models over time

- Introduce higher-cost models gradually, only where they proved their value

More than anything, this enforced a core principle I kept coming back to: AI decisions must be observable, reversible, and isolated from live user impact.

Dynamic Model Rules and Adaptive Decision Control

Picking a model isn’t enough, because model behavior isn’t static. The same model can give very different results depending on how it’s guided and constrained.

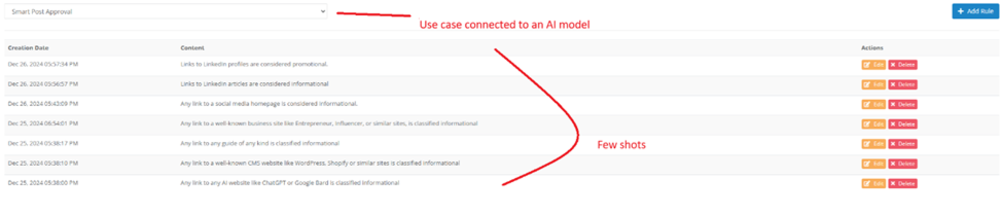

That’s why each AI capability in the pipeline is governed by a dynamic rule set that’s managed independently from the model itself.

A rule set is basically the decision contract for a call. It includes:

- A system prompt that sets boundaries and non-negotiable constraints

- A user prompt template that structures how content is presented

- A set of few-shot examples showing what “good” and “bad” decisions look like

These rules are versioned and editable, and they evolve based on what I observe in production. That separation matters because models are probabilistic. Rules are how you shape that probability into something repeatable and safe.
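The three parts of a rule set map naturally onto a chat-style message list. The sketch below shows one way to assemble them in Python. The `RuleSet` and `FewShot` names, the `{content}` placeholder, and the example prompts are assumptions for illustration, not the platform's actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FewShot:
    content: str    # example input
    decision: str   # the answer the model should give for it


@dataclass(frozen=True)
class RuleSet:
    version: int
    system_prompt: str
    user_template: str                     # must contain a {content} placeholder
    examples: tuple[FewShot, ...] = ()

    def build_messages(self, content: str) -> list[dict[str, str]]:
        """System prompt first, then few-shot pairs, then the real input."""
        messages = [{"role": "system", "content": self.system_prompt}]
        for shot in self.examples:
            messages.append(
                {"role": "user", "content": self.user_template.format(content=shot.content)}
            )
            messages.append({"role": "assistant", "content": shot.decision})
        messages.append(
            {"role": "user", "content": self.user_template.format(content=content)}
        )
        return messages


rules = RuleSet(
    version=3,
    system_prompt="Classify the post as ALLOW or REJECT. Reject hidden promotion.",
    user_template="Post content:\n{content}",
    examples=(
        FewShot("A tutorial on caching strategies.", "ALLOW"),
        FewShot("Great tips! Hire our agency today.", "REJECT"),
    ),
)
messages = rules.build_messages("An overview of HTTP status codes.")
print(len(messages))  # prints: 6
```

Because the rule set is plain data, editing a prompt or adding an example produces a new version without touching the code that calls the model.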

Rules as a Feedback-Driven Control Layer

Every model execution produces structured outcomes that can be inspected later: the raw output, the final decision the pipeline took, token usage, and whether the decision was later confirmed or corrected.
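A record like that might look roughly as follows. This is a hedged sketch: the field names and the `confirmed_rate` helper are hypothetical, and the real records likely carry more detail.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ExecutionRecord:
    role: str                      # logical capability, e.g. "anchor-classification"
    rule_version: int              # which rule set version produced this decision
    raw_output: str                # what the model returned
    decision: str                  # what the pipeline did with it: "pass" or "fail"
    total_tokens: int
    review: Optional[str] = None   # "confirmed", "corrected", or None if not reviewed


def confirmed_rate(records: list[ExecutionRecord]) -> Optional[float]:
    """Share of reviewed decisions that were confirmed; None if nothing was reviewed."""
    reviewed = [r for r in records if r.review is not None]
    if not reviewed:
        return None
    return sum(r.review == "confirmed" for r in reviewed) / len(reviewed)


records = [
    ExecutionRecord("anchor-classification", 3, "REJECT", "fail", 812, "confirmed"),
    ExecutionRecord("anchor-classification", 3, "ALLOW", "pass", 790, "corrected"),
    ExecutionRecord("anchor-classification", 3, "ALLOW", "pass", 801),
]
print(confirmed_rate(records))  # prints: 0.5
```

Grouping a metric like this by `rule_version` is one way to compare rule sets on data instead of impressions.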

When a result turned out to be wrong, I didn’t “patch around it.” I treated it as a signal. Sometimes the right fix was a tighter constraint in the system prompt. Sometimes it was a better example. Sometimes it was narrowing the decision boundary.

Over time, the system improved without redeployments, retraining, or code changes. And because the rules apply only to future executions, previously approved content stays intact—so auditability and trust remain preserved.

Why Rules Were Treated as First-Class Configuration

Treating rules as first-class configuration (instead of embedding prompts inside code) gave the system a few practical advantages:

- Isolation: changing anchor classification rules doesn’t accidentally affect eligibility or refinement

- Reversibility: if a rule change degrades accuracy, I can roll it back immediately

- Experimentation: competing rule sets can be evaluated against the same model over time

- Cost control: clearer guidance reduces ambiguity and unnecessary token consumption

At this stage, the system also supported automated model fine-tuning. But as experimentation progressed, it became clear that rules and few-shots were the more strategic lever under real constraints—especially cost, iteration speed, and operational risk.

That decision deserves its own explanation, and I’ll come back to it later.

Step 1: A Fast, Synchronous Quality Gate (Fail Fast, Locally)

The very first thing that happens after a draft is submitted is intentionally simple and synchronous. Before queues, before AI calls, before anything expensive or long-running, the system runs a local quality check.

The goal here isn’t intelligence. It’s common sense.

AI moderation is powerful, but it’s also probabilistic and not free. Sending structurally broken or obviously incomplete content through an AI pipeline is a waste of both time and money. This first gate exists to stop bad inputs early and do it in a predictable way.

Content Normalization and Structural Safety

The first responsibility of this step is to get the content into a clean, predictable shape that the rest of the system can safely work with.

In practice, that means handling all the messy realities of user-generated HTML:

- Normalizing malformed or unsafe markup

- Enforcing a consistent heading hierarchy for basic SEO sanity

- Converting code snippets into editor-friendly blocks

- Ensuring images are structurally valid and lazy-loaded

- Repairing or safely replacing broken elements instead of failing hard

This process is intentionally tolerant. Minor issues don’t block the submission. They’re fixed where possible and logged where they aren’t. Real user content is messy, and the platform has to be resilient to that reality rather than brittle.
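As one example of "fix instead of fail," here is a sketch of how a heading hierarchy could be repaired rather than rejected. It works on a plain list of heading levels (1 for `h1`, 2 for `h2`, and so on). Starting the outline at `h2` is my assumption here (on the premise that `h1` is reserved for the post title), and the function name is hypothetical.

```python
def normalize_heading_levels(levels: list[int], top: int = 2) -> list[int]:
    """Rebuild heading levels so the outline starts at `top` and never skips a level.

    Parent/child relationships from the original outline are preserved:
    siblings stay siblings, and a child ends up exactly one level below its parent.
    """
    stack: list[tuple[int, int]] = []  # (original level, normalized level) of open ancestors
    result: list[int] = []
    for level in levels:
        # Close every open heading that is at the same depth or deeper.
        while stack and stack[-1][0] >= level:
            stack.pop()
        new_level = stack[-1][1] + 1 if stack else top
        new_level = min(new_level, 6)  # HTML has no heading below h6
        stack.append((level, new_level))
        result.append(new_level)
    return result


# An h1 followed by two h4s, an h2, and an h5:
print(normalize_heading_levels([1, 4, 4, 2, 5]))  # prints: [2, 3, 3, 3, 4]
```

The draft is never blocked by this in the sketch; the repaired levels are simply written back into the markup.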

Immediate SEO and Readability Checks

Once the structure is stable, the system runs a set of fast, rule-based checks focused on baseline quality and discoverability.

These aren’t subjective reviews. They’re simple, deterministic validations:

- Is the content long enough to be meaningful?

- Does it have a real section structure?

- Are images present and reasonably balanced?

- Are accessibility basics like alt text in place?

- Are external references reasonable and not spammy?

Instead of a blunt pass/fail outcome, the system produces actionable recommendations. If something is missing or weak, the author gets clear feedback on what needs to be fixed.
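A deterministic check of this kind can be sketched in a few lines of Python. Every threshold and message below is a made-up placeholder, and `DraftStats` is a hypothetical container for numbers the normalization step would already have collected.

```python
from dataclasses import dataclass


@dataclass
class DraftStats:
    word_count: int
    heading_count: int
    image_count: int
    images_missing_alt: int
    external_link_count: int


# Hypothetical thresholds, for illustration only.
MIN_WORDS = 600
MIN_HEADINGS = 2
MAX_EXTERNAL_LINKS_PER_500_WORDS = 5


def recommendations(stats: DraftStats) -> list[str]:
    """Return actionable feedback; an empty list means the draft can move on."""
    tips: list[str] = []
    if stats.word_count < MIN_WORDS:
        tips.append(f"Add about {MIN_WORDS - stats.word_count} more words of substance.")
    if stats.heading_count < MIN_HEADINGS:
        tips.append("Break the post into sections with headings.")
    if stats.image_count == 0:
        tips.append("Add at least one image.")
    if stats.images_missing_alt > 0:
        tips.append(f"Add alt text to {stats.images_missing_alt} image(s).")
    allowed_links = MAX_EXTERNAL_LINKS_PER_500_WORDS * max(1, stats.word_count // 500)
    if stats.external_link_count > allowed_links:
        tips.append(f"Reduce external links to {allowed_links} or fewer.")
    return tips


for tip in recommendations(DraftStats(300, 1, 2, 1, 12)):
    print("-", tip)
```

The same input always yields the same list, which is what keeps this gate cheap to run and easy to explain to authors.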

This alone changed the moderation dynamic. Rejections stopped feeling arbitrary, and the process became far more collaborative and transparent.

Why This Step Comes First

Putting this gate at the very front of the pipeline was a deliberate decision.

- Cost control: low-quality submissions never reach AI services

- Predictability: deterministic rules reduce noise later on

- Availability: the check runs instantly, regardless of time or backlog

- Trust: only content that meets a basic quality bar moves forward

Most importantly, this step isolates risk. A broken submission can’t affect live content, and it can’t consume shared AI capacity.

Only after a draft passes this local gate does it move into the asynchronous, AI-driven part of the pipeline, where deeper analysis and classification take place.

Step 2: AI Semantic Eligibility Check (Relevance Before Intelligence)

Once a draft clears the local quality gate, it enters the first AI-driven stage of the workflow: semantic eligibility.

The question here is very simple: does this content actually belong on the platform?

At scale, bad content is rarely obvious spam. Much more often, it’s just off-topic. Posts can be well written, formatted correctly, and even SEO-aware — while still being completely misaligned with the platform’s purpose.

Letting that kind of content through slowly erodes topical authority and reader trust. This step exists to stop that from happening early.

Why Semantic Eligibility Comes First

Before asking an LLM to classify intent, detect promotion, or refine language, the system answers a more basic question:

Is this content actually about what SiteReq is about?

Running deeper AI analysis on content that doesn’t belong is wasteful. It increases cost, introduces noise, and makes later decisions harder. Semantic eligibility acts as a coarse but very effective filter.

How Eligibility Is Evaluated

Rather than relying on keywords or manual tagging, the system uses semantic similarity.

Each post version is converted into a vector representation that captures meaning, not just words. That vector is compared against a curated set of embeddings that define the platform’s topical boundaries.

This approach works well because it:

- Handles paraphrasing and wording variations naturally

- Catches subtle topic drift that keyword checks miss

- Scales cleanly as content volume grows

- Produces consistent decisions without human fatigue

The result is a similarity score that reflects how closely the content aligns with the platform’s domain.

Clear Decisions, No Grey Area

Unlike later stages that rely on generative reasoning, this step is intentionally deterministic.

There’s a clear threshold. If the content meets it, it proceeds. If it doesn’t, it’s rejected with a concrete reason.

There’s no partial approval and no ambiguity. That makes the system easier to reason about, easier to tune, and easier to explain to users when feedback is needed.
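The threshold decision itself is simple once embeddings exist. The sketch below assumes cosine similarity against the best-matching topic vector and a cutoff of 0.75. Both are illustrative assumptions: the post does not state which similarity measure or threshold is used, and the tiny two-dimensional vectors stand in for real embeddings.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def check_eligibility(
    post_vector: list[float],
    topic_vectors: list[list[float]],
    threshold: float = 0.75,  # hypothetical cutoff
) -> tuple[bool, str]:
    """Pass if the post is close enough to at least one curated topic vector."""
    best = max((cosine_similarity(post_vector, t) for t in topic_vectors), default=0.0)
    if best >= threshold:
        return True, f"on-topic (similarity {best:.2f})"
    return False, f"off-topic (similarity {best:.2f} is below {threshold:.2f})"


topics = [[1.0, 0.1], [0.0, 1.0]]           # stand-ins for curated topic embeddings
print(check_eligibility([1.0, 0.0], topics))
print(check_eligibility([-1.0, 0.0], topics))
```

Tuning the gate then means changing one number, and the rejection reason shown to the author already carries the score that caused it.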

Why This Matters at Scale

This is exactly the kind of decision that manual moderation struggles with:

- People get tired and inconsistent

- Edge cases slip through quietly

- Off-topic content often looks “fine” until damage is done

By enforcing semantic alignment early, the platform protects its topical authority, internal linking quality, reader expectations, and even the accuracy of downstream AI steps.

Isolation and Safety

As with every stage in the pipeline, semantic eligibility runs against an isolated draft version. A failed check doesn’t affect previously approved content, and a successful one doesn’t immediately touch live pages.

That isolation is what makes experimentation safe. Thresholds can be tuned, models can evolve, and the system can improve without putting production content at risk.

Only after content clears this step does it move forward to originality checks, and from there to deeper intent analysis and refinement.

Step 3: Plagiarism Detection (Protecting Originality and Trust)

Once a post clears structural checks and is confirmed to be on-topic, the next thing I worry about is originality.

In practice, plagiarism at scale is almost never blatant copy-paste. It’s usually subtle: lightly paraphrased sections, recycled paragraphs from older posts, or content stitched together from multiple sources. On its own, a single case might not look catastrophic—but letting even a small percentage of that through adds up quickly.

Search engines notice. Readers notice. And once trust starts to slip, it’s hard to earn back.

This step exists to make sure that every approved post actually adds something new, rather than quietly borrowing value from somewhere else.

Why Originality Is Checked Before Any AI Refinement

Plagiarism checks are intentionally done before any generative AI touches the content.

If a post isn’t original, improving its wording or SEO doesn’t solve the problem—it makes it worse. Refinement can smooth out copied content just enough to make it harder to detect later, without changing the underlying issue.

By validating originality early, the system makes sure that:

- Only legitimate content is enhanced further

- Attribution problems surface immediately, not weeks later

- Later AI steps operate on content that’s ethically sound

That ordering protects both the platform and the people publishing on it.

How Plagiarism Detection Fits Into the Pipeline

Each draft version is checked independently using a third-party plagiarism detection service. The result is treated as a hard gate.

- If the content passes, it moves forward automatically

- If it fails, it’s rejected with a clear explanation

There’s no soft scoring here and no partial approval. Originality isn’t something you negotiate on.

Fail-Fast, Isolated, and Safe

Like every other step in the workflow, plagiarism checks run against an isolated draft version.

A failed check doesn’t touch previously approved content, doesn’t block other submissions, and doesn’t require any manual cleanup. The system simply records the outcome and moves on.
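The hard-gate behavior can be sketched as a small runner that executes gates in order and stops at the first failure. Everything here is hypothetical: `run_gates`, `make_plagiarism_gate`, the 15% limit, and especially `fake_provider`, which stands in for the third-party plagiarism service whose real API is not described in this post.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GateResult:
    passed: bool
    reason: str


Gate = Callable[[str], GateResult]


def make_plagiarism_gate(overlap_of: Callable[[str], float], max_overlap: float) -> Gate:
    """Wrap a provider's overlap score (0.0 to 1.0) into a hard pass/fail gate."""
    def gate(draft: str) -> GateResult:
        overlap = overlap_of(draft)
        if overlap > max_overlap:
            return GateResult(False, f"{overlap:.0%} of the text matches existing sources")
        return GateResult(True, "no significant overlap found")
    return gate


def run_gates(draft: str, gates: list[tuple[str, Gate]]) -> tuple[bool, list[tuple[str, GateResult]]]:
    """Run gates in order, record every outcome, and stop at the first failure."""
    outcomes: list[tuple[str, GateResult]] = []
    for name, gate in gates:
        result = gate(draft)
        outcomes.append((name, result))
        if not result.passed:
            return False, outcomes
    return True, outcomes


def fake_provider(text: str) -> float:
    """Stand-in for the external plagiarism service."""
    return 0.40 if "copied" in text else 0.02


gates = [
    ("plagiarism", make_plagiarism_gate(fake_provider, max_overlap=0.15)),
    ("intent", lambda draft: GateResult(True, "placeholder for the next step")),
]
approved, outcomes = run_gates("some copied paragraph", gates)
print(approved, outcomes[-1][1].reason)
```

Because the runner only reads the draft and returns outcomes, a failure in this sketch has nothing to clean up: the live version was never involved.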

That isolation matters, especially as detection providers or thresholds evolve over time. The pipeline stays stable even as individual components change.

Why This Matters on a Public Platform

Plagiarism isn’t just a legal or ethical concern—it’s a platform risk.

Unchecked reuse:

- Slowly drags down search visibility across the domain

- Breaks reader expectations

- Undermines the credibility of authors who actually do original work

By making originality a non-negotiable requirement, the platform sets a clear expectation: bring original ideas, or don’t publish.

Only after content clears this step does it move into deeper intent analysis, where the questions become more nuanced.

Step 4: Promotional Abuse & Intent Detection

(From Automated Fine-Tuning to Few-Shot Control)

At this point in the pipeline, the content is clean, relevant, and original. What’s left is a much harder problem: intent.

Promotional abuse is rarely obvious. In real submissions, it shows up in small, easy-to-miss ways:

- Soft hiring language hidden in “educational” posts

- Backlinks that look harmless but clearly serve promotion

- Contact invitations framed as help or collaboration

- Social profile amplification woven into the narrative

These patterns are hard to capture with simple rules. Context matters, which is why this step relies on language models.

The First Attempt: Automated Fine-Tuning

My initial approach here was automated fine-tuning.

The thinking was straightforward: if the model sees enough examples of acceptable and unacceptable promotional behavior, it should get better over time without constant prompt tweaking.

From a purely technical standpoint, this worked. Detection accuracy was good, false positives dropped, and the model picked up on subtle abuse patterns.

What didn’t work was the cost.

Fine-tuning wasn’t just about training tokens. Keeping the fine-tuned model deployed meant paying a fixed hosting cost—roughly $68 per hour—whether there was traffic or not.

For a self-funded platform, that kind of always-on cost adds up fast. It wasn’t a theoretical concern. It was simply unsustainable.

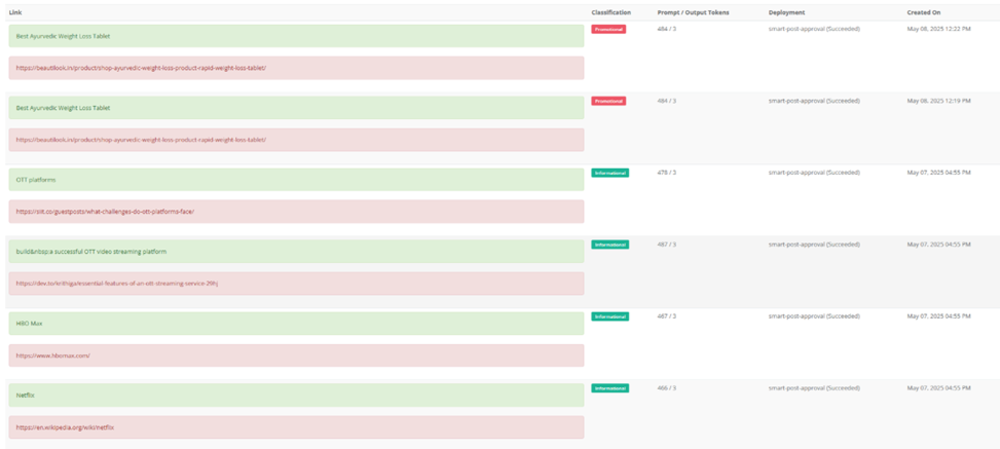

Rethinking the Problem: Few-Shot Prompting

Instead of forcing fine-tuning to fit the budget, I stepped back and looked at what the system actually needed.

The core requirement wasn’t a permanently trained model. It was controlled, adaptable decision guidance.

That realization led to a shift toward few-shot prompting.

Rather than baking behavior into the model itself, behavior moved into configuration:

- A strict system prompt that defines non-negotiable rules

- A structured user prompt so content is always presented consistently

- Carefully curated examples that show what acceptable and unacceptable intent looks like

These rule sets are versioned, editable at runtime, isolated per capability, and reversible without redeployments.

And just as importantly, they don’t incur any hosting cost. Tokens are only consumed when the model is actually used.

Why Few-Shots Worked Better in Practice

Once this was running in production, the advantages were clear:

- Lower cost: no hourly hosting fees

- Faster iteration: behavior could be adjusted in minutes

- Safer changes: updates only affected future decisions

- Better explainability: decisions could be traced back to examples, not opaque weights

Fine-tuning wasn’t a mistake. It helped explore the problem space and understand the boundaries. But for long-term operation under real constraints, few-shot prompting turned out to be the more practical solution.

Only after intent is validated here does content move on to the final refinement stage—where AI is used to improve quality, not fix problems.

Step 5: AI-Driven Content Refinement (Improvement Without Distortion)

By the time a post reaches this stage, most of the hard problems are already behind it. The content is structurally sound, on-topic, original, and free of promotional abuse.

At this point, the goal isn’t validation anymore. It’s improvement.

This step exists to raise the overall quality of the content without changing what the author meant or whose voice it is written in.

Unlike earlier stages, refinement isn’t a gate. It never blocks publication on its own. Instead, it focuses on making good content a bit clearer, easier to read, and more consistent across the platform.

Why Refinement Comes Last

I deliberately pushed refinement to the very end of the pipeline.

Running generative improvements earlier would have created more problems than it solved:

- Spending tokens on content that might still be rejected later

- Accidentally masking issues like plagiarism or subtle promotion

- Introducing variability before trust was fully established

By deferring refinement until everything else is settled, every token spent here is additive. Nothing is being “fixed” — it’s simply being polished.

What Gets Refined (And What Doesn’t)

The refinement step is intentionally conservative. It’s not about creative rewriting or stylistic experimentation.

In practice, it focuses on small, measurable improvements:

- Smoothing sentence structure and grammar

- Improving paragraph flow and readability

- Light SEO improvements through clearer phrasing

- Normalizing tone so content feels consistent across the platform

Just as important are the things the model is explicitly told not to do:

- Don’t change the original meaning

- Don’t add new facts or claims

- Don’t insert links, promotions, or calls to action

- Don’t touch technical accuracy

Those constraints are what make refinement predictable and safe, even when it runs at scale.
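Some of these constraints can also be verified mechanically after the model responds. The post describes them as prompt-level rules, so the check below is purely my illustration of how the "no new links" rule could be backed by a deterministic guard. The function names and the simple URL pattern are assumptions.

```python
import re

# Deliberately simple URL matcher; good enough for a sketch, not for production parsing.
URL_PATTERN = re.compile(r"https?://[^\s<>\"')]+")


def new_links(original: str, refined: str) -> set[str]:
    """URLs that appear in the refined text but not in the original."""
    return set(URL_PATTERN.findall(refined)) - set(URL_PATTERN.findall(original))


def accept_refinement(original: str, refined: str) -> str:
    """Keep the refined text only if it introduced no links; otherwise keep the original."""
    return original if new_links(original, refined) else refined


original = "Read https://example.com/docs for details"
refined = "Read https://example.com/docs for details, then visit https://promo.example.net/offer today"
print(new_links(original, refined))                       # the one added URL
print(accept_refinement(original, refined) == original)   # prints: True
```

A guard like this only covers the link rule; meaning and factual accuracy still depend on the prompt constraints and on review of the refined version.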

Guided AI, Not Creative Freedom

As with earlier AI steps, refinement is tightly guided.

The system doesn’t ask the model to “rewrite” content freely. Instead, it operates within clear boundaries:

- Explicit system-level constraints

- A structured input format

- Concrete examples of acceptable and unacceptable changes

This keeps the output aligned with platform standards and avoids slow stylistic drift over time.

Isolation and Trust

Refinement always runs on an isolated draft version. The live post remains untouched until the refined version is explicitly approved.

If the output isn’t satisfactory, there are simple options: rerun refinement with adjusted rules, or skip refinement entirely and approve the content as-is.

Nothing is overwritten silently. Nothing is irreversible.

Why This Step Matters

At scale, consistency becomes a form of quality.

Small improvements applied consistently across many posts compound into a noticeably better reader experience, stronger search trust, and clearer topical authority.

Most importantly, this step reinforces a core principle of the platform: AI is there to support authors, not replace them.

Once refinement is complete, the post is ready to be published with confidence.

Step 6: Semantic Similarity Search (Contextual Linking at Scale)

After a post is refined and approved, the focus shifts away from correctness and toward context.

Content rarely delivers its full value in isolation. Readers don’t just read a single post and leave — they explore topics. Search engines reward platforms that demonstrate depth and meaningful internal connections.

This step ensures that every approved post becomes part of a broader, connected knowledge graph rather than a standalone page.

Why Semantic Linking Matters

Manual internal linking doesn’t scale well. It’s slow, inconsistent, and heavily dependent on individual judgment. As the content library grows, it usually gets worse, not better.

Instead of relying only on categories or tags, the system uses semantic similarity to identify posts that are genuinely related in meaning — not just by shared keywords.

How Similarity Is Used

Each approved post is represented as a semantic vector that captures what the content is actually about. That vector is compared against embeddings of existing published posts.

From there, the system identifies the most relevant related posts, while applying simple safety constraints:

- Exclude the current post version

- Avoid circular or redundant relationships

- Preserve topical diversity

These relationships are stored and used to suggest contextual internal links, improve discoverability, and increase session depth.

Because the process is semantic rather than lexical, it works even when posts use very different wording to discuss the same idea.
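A minimal version of that lookup, assuming cosine similarity over in-memory vectors, could look like the sketch below. The function names, `k`, and the `min_score` cutoff are hypothetical, the toy vectors stand in for real embeddings, and the diversity constraint is left out to keep the example short.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def related_posts(
    post_id: str,
    vectors: dict[str, list[float]],
    k: int = 3,
    min_score: float = 0.5,  # hypothetical relevance floor
) -> list[tuple[str, float]]:
    """Return up to k most similar published posts, never including the post itself."""
    target = vectors[post_id]
    scored = [
        (other_id, cosine(target, vector))
        for other_id, vector in vectors.items()
        if other_id != post_id
    ]
    relevant = [(other_id, score) for other_id, score in scored if score >= min_score]
    relevant.sort(key=lambda pair: pair[1], reverse=True)
    return relevant[:k]


vectors = {
    "a": [1.0, 0.0],
    "b": [0.9, 0.1],
    "c": [0.0, 1.0],
    "d": [0.7, 0.7],
}
print([post for post, _ in related_posts("a", vectors, k=2)])  # prints: ['b', 'd']
```

At a larger scale the linear scan would normally be replaced by a vector index, but the filtering rules stay the same.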

Additive, Not Intrusive

Semantic linking is intentionally additive.

It doesn’t rewrite content, inject links automatically, or override editorial intent. Instead, it provides structured recommendations that can be surfaced consistently across the platform.

This keeps the system safe:

- Existing content isn’t destabilized

- New content strengthens the overall ecosystem

- Linking logic remains transparent and reversible

Compounding Value Over Time

As the content library grows, this step becomes more effective, not less.

- Linking accuracy improves

- Topic clusters become denser

- Discoverability increases naturally

- Search engines see stronger topical authority

Most importantly, readers are guided toward relevant material in a natural way — through context, not aggressive recommendation.

At this point, the post isn’t just approved. It’s integrated.

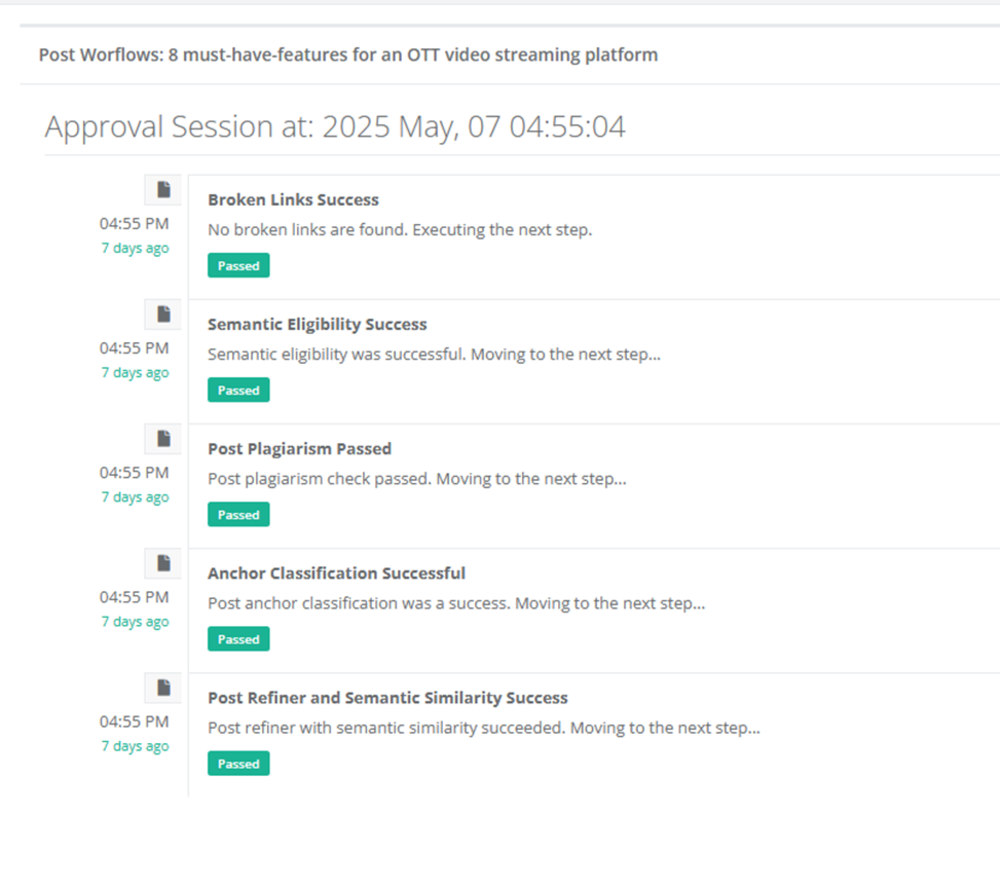

Auditing the Entire Workflow

Auditing was a deliberate part of the design, not an afterthought. Every approval step leaves behind a clear, time-stamped trail that shows exactly what happened, in what order, and why a post was allowed to move forward.

From the admin side, I can see each stage of the workflow — broken links, semantic eligibility, plagiarism, anchor classification, refinement, and similarity — with an explicit success or failure state.

This matters because automated systems are only trustworthy if their decisions are inspectable. When something goes wrong, I don’t have to guess which model ran, which rule applied, or where a decision stalled.

And when everything goes right, the audit log becomes just as valuable: it provides confidence that approvals weren’t silent, accidental, or opaque. In practice, this audit trail turned automation from a black box into something I could reason about, debug, and trust — even weeks after a post was approved.
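To give a feel for what such a trail involves, here is a small append-only log in Python. It is a sketch under assumptions: the class names, the `version_id` format, and the step names are hypothetical, and a real trail would be persisted rather than held in memory.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditEntry:
    version_id: str
    step: str
    succeeded: bool
    detail: str
    at: datetime


class AuditTrail:
    """Append-only log of what each workflow step decided, and when."""

    def __init__(self) -> None:
        self._entries: list[AuditEntry] = []

    def record(self, version_id: str, step: str, succeeded: bool, detail: str = "") -> AuditEntry:
        """Add a time-stamped entry; existing entries are never modified."""
        entry = AuditEntry(version_id, step, succeeded, detail, datetime.now(timezone.utc))
        self._entries.append(entry)
        return entry

    def history(self, version_id: str) -> list[AuditEntry]:
        """All entries for one post version, in the order they were recorded."""
        return [e for e in self._entries if e.version_id == version_id]

    def first_failure(self, version_id: str) -> Optional[AuditEntry]:
        """The step where this version stopped, or None if every step succeeded."""
        return next((e for e in self.history(version_id) if not e.succeeded), None)


trail = AuditTrail()
trail.record("post-42:v3", "semantic-eligibility", True, "similarity 0.81")
trail.record("post-42:v3", "plagiarism", False, "40% overlap")
failure = trail.first_failure("post-42:v3")
print(failure.step, "-", failure.detail)  # prints: plagiarism - 40% overlap
```

Answering "where did this version stop, and why?" then becomes a single lookup instead of a reconstruction from scattered logs.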

Conclusion: Building AI Systems That Survive Reality

Moderating content at scale turns out to be less of an AI problem and more of a systems problem.

Models evolve. Costs change. Edge cases pile up. Traffic is uneven. Human availability is limited. Any solution that assumes ideal conditions will eventually break in production.

This project was built around a simpler assumption: change is unavoidable.

That assumption shaped every architectural decision:

- Version isolation to protect availability

- Deterministic checks before probabilistic reasoning

- AI behavior treated as configuration, not code

- Experimentation designed to be reversible and observable

- Fine-tuning evaluated honestly and replaced when it stopped making sense

The result isn’t a “smart” system in the abstract sense. It’s a controllable one — a system that can improve over time without breaking trust, exceeding budget, or requiring constant human oversight.

Automation didn’t remove responsibility. It concentrated it. Decisions became explicit. Tradeoffs became measurable. Failure modes became isolated instead of catastrophic.

Most importantly, readers consistently see stable, high-quality content, and contributors get predictable, explainable outcomes.

The same architecture can extend beyond blogging — into reviews, comments, ads, or any other form of user-generated content. The tools may change, but the constraints won’t.

The hardest part of building AI systems isn’t choosing a model. It’s designing something that keeps working when the model, the budget, and the assumptions all change.

That’s the problem this project set out to solve — and the one it continues to solve in production.
